I've had many different development platforms over the years - from Notepad++ on library computers in my youth, to Gentoo and then Ubuntu installed on a series of carefully-chosen laptops with working drivers, and then for the last five years or so on Surface devices via the rather wonderful Windows Subsystem for Linux (WSL).

Of course, in the WSL era I am still just running Ubuntu, but inside the pseudo-VM that is the WSL subsystem of the Windows kernel. It's honestly pretty great, and I regularly joke that I'm using Windows as the GUI layer to develop on Linux.

Between the Steam Deck and WSL both being ascendant, maybe we finally got the Year Of Linux On The Desktop, just not as we expected.

As good as my local development environment has gotten though, the problem I've often faced is that I want to develop things on three separate computers. My Surface Laptop Studio is my main, powerful machine, and lives docked in my home office. It's portable, but it's also relatively big and heavy so I don't take it out to, say, relax on the sofa with.

My main machine for that is my Surface Pro 9 With 5G (what a mouthful) - in other words, the latest ARM Surface. It's small, light, portable, and easily does thirteen hours on a charge if you're just watching video or coding. WSL works pretty well under Windows ARM - you just run the ARM variant of the distribution you want - but it's still a separate device with its own state.

Lastly, I have a desktop in my workshop that I often want to use. My workshop is a separate industrial unit about five minutes from my house, so I often go there to have a change of scenery from just working at home. That doesn't even have a dev environment as it's mostly for CAD/CAM work; I've got a dock there that I can plug the SP9 into and then switch the keyboard and mouse over if I want to work from there, but it's a bit of a faff.

Of course, I could just accept hauling the Surface Laptop Studio around, but that's extra work, and the extra size and weight are especially unwelcome on shorter trips, since I normally travel with just a backpack. It is so nice to just tote the Surface Pro 9 around, especially given the built-in 5G modem.

It's Never Just The Code

I've lived with this situation for a few years now, and in the last month I decided it was finally time to do something about it; the mental overhead this all added was making me code less in my spare time, as I dreaded figuring out which laptop had my working changes and the most current database on it.

You might say "But Andrew! Just commit it all to a Git branch every time!" And yes, you're right, that would help solve the "where are my changes" part of the equation, but the database matters far more - and the incoming connections and routing matter even more than that.

Developing Takahē is a great example of this; a Fediverse server needs an inbound connection (with HTTPS!) to the rest of the internet, even for testing - unless you feel like running one of every other server on the same machine. I have a Tailscale pod running on the main Takahē Kubernetes cluster that forwards requests for a domain to my laptop, but it's fixed to that laptop, and the database on that machine is intricately tied into the domain and addressing as well.

This website is easier - I moved it over to be entirely YAML-powered a couple of years back (it just reads all the YAML into an in-memory SQLite database on boot so I get all my Django niceties still) - but as much as I know I should just commit things to Git all the time, I don't. I forget, or I don't know what to put for the commit message, or I get called away from the computer suddenly.
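As a rough illustration of the "read all the YAML into an in-memory SQLite database on boot" idea, here is a minimal Python sketch using only the standard library sqlite3 module. It is not the code this site actually runs: to stay self-contained it skips the YAML parsing step and starts from a hypothetical list of already-parsed records, and the posts table, its columns, and the helper names are all invented for the example.

```python
import sqlite3

# Hypothetical stand-in for what a YAML parser would return after reading the
# content files; the real site's schema and field names are not shown here.
RECORDS = [
    {"slug": "first-example", "title": "First Example", "date": "2022-05-01"},
    {"slug": "second-example", "title": "Second Example", "date": "2023-02-14"},
]


def build_database(records: list[dict]) -> sqlite3.Connection:
    """Create a fresh in-memory SQLite database and load the records into it."""
    conn = sqlite3.connect(":memory:")  # exists only as long as this process
    conn.execute(
        "CREATE TABLE posts (slug TEXT PRIMARY KEY, title TEXT NOT NULL, date TEXT NOT NULL)"
    )
    # Named placeholders let each dict be inserted as-is.
    conn.executemany(
        "INSERT INTO posts (slug, title, date) VALUES (:slug, :title, :date)",
        records,
    )
    conn.commit()
    return conn


def titles_newest_first(conn: sqlite3.Connection) -> list[str]:
    """Query the loaded data like any other database; ISO dates sort as text."""
    rows = conn.execute("SELECT title FROM posts ORDER BY date DESC")
    return [title for (title,) in rows]


conn = build_database(RECORDS)
print(titles_newest_first(conn))  # ['Second Example', 'First Example']
```

Because the database only ever lives in memory, it is rebuilt from the files on every start, which keeps the YAML files in Git as the single source of truth.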

So, I not only have to move code between machines, but I have to keep a database synchronised with on-disk media, and also port around an incoming socket, and also make sure my Python virtualenvs all have the same packages constantly, plus a bunch of other stuff. Annoying, eh?

Other People's Computers

My first attempt to try and solve this was me finally trying out GitHub Codespaces - after all, it integrates well into my current editor of choice (VS Code), there's a free tier, and it seems to be generally well-regarded.

And yes, it works well when your requirements are relatively simple. For this blog it was trivial - launch the container, run the server, and the port auto-forwards so it can be accessed via localhost. Great!

Then, I looked into what it would take to get a PostgreSQL server running - some docker-compose, sure, I deserve that (I co-wrote the tool that eventually became Docker Compose, and I think I was the one who decided on the basics of the YAML format, so - my apologies and you're welcome).

But then I realised that Codespaces is really oriented around reproducible, on-demand development environments that don't really persist. Sure, you can set up a database and get some data in there, and it'll all save to disk and suspend nicely if you get the Docker volumes right, and it boots up again in about 20 seconds - but want to change the base image? Or reload your dotfiles repo? You need to blow away the Codespace and make a new one.

Now this is a great thing to enforce on a company with lots of developers - making development environments cattle rather than pets is perfect, and as long as you staff a developer tooling team appropriately, everyone's going to have a good time and onboard onto different projects quickly, and you can have scripts that generate example data or restore anonymised backups to fill out the database.

However, my developer tools team is just me, and maybe my cats if they feel like helping out by sitting on the desk and following the mouse. My development environments are pets rather than cattle, especially when I'm rapidly prototyping or doing weird experiments, as I don't have the certainty in my planning to invest time in making it all reproducible.

Codespaces was neat, and if I need to just fire up a web browser anywhere on earth and write a blog post, I have that bit all set up now. It wasn't going to work for my more complex projects, though - for that I'd have to look closer to home.

What Lies Beneath

I started my career as a sysadmin, and I still have a fondness for racking servers and doing the cable management just so. The only thing that might get me to eagerly go back into an office, apart from having an actual office rather than a desk in a giant open space, would be the promise of a giant control room with screens everywhere or racks to play with.

If you do want to offer me a job where I can somehow tech lead with access to a giant control room and/or lots of racks, you know where to find me.

However, given I don't currently have access to this, I opted for the second-best option - my basement. I already run a commercial-grade UniFi setup for my home wifi (it used to go out to North Bay Python and double as the conference wifi for a couple hundred people), and previously I had a Synology NAS sitting next to that which gave me around 20TB of storage across six disks, as well as a Plex server and Home Assistant running as services.

To me there's something better than nice, consumer-friendly devices, though - and that's commercial-grade rack hardware. Built-in lights-out management, easy to access and upgrade, diagrams on the inside of how to replace everything. It's how servers should be (though I still maintain that the old Sun servers were actually the nicest to work on and maintain - I miss the colour coding especially).

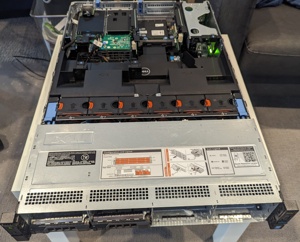

And, fortunately, there is a healthy secondary market for rack-mount servers, since the big customers mostly care about efficiency per watt (and these days, how many GPUs they can fit inside the chassis). After some searching through eBay and comparing various different brands and models, I ended up buying a Dell R730xd for $500 plus shipping.

This is a proper beast of a machine for $500 - twelve 3.5" drive bays, two 2.5" bays, two Xeon E5-2690 processors with 16 cores each, and 128GB of RAM. When it was new, these were the kinds of machines that Google built their Search Appliances on - for me, it was perfect as a server to both be a NAS and host virtual machines.

The 2U form factor means it's got a bit more space to work with than a 1U server and less shrill fans - though it lives in the basement, so the fan noise is really not too much of a worry as long as it doesn't sound like it's trying to take off down there.

I went off to Micro Center and got five 16TB hard drives to start filling out some of the front bays, as well as an SSD for the main system drive. I also swapped the hardware RAID controller out for an HBA card (they're only $20 on eBay, and giving ZFS direct access to the disks is what makes it actually reliable), and then installed TrueNAS Scale.

The One True(NAS) Way

TrueNAS versus a commercial NAS offering is a relatively easy pick for me as someone who's still a competent sysadmin - if you want to get a comparable twelve-bay offering from Synology, say, you're looking at somewhere in the region of $10,000, and TrueNAS is the best of the breed in terms of "NAS software you install on your own system".

TrueNAS comes in two flavours these days, however - TrueNAS Core is the old, proven BSD-based variant, and TrueNAS Scale is the new, more featureful Debian-based variant. Both offer ZFS and RAIDZ as their primary storage mechanism, and via some seriously dark magic you can upgrade in-place (!) from Core to Scale, but not the other way around.

If I was just doing a plain NAS, I'd have gone with Core, but since I wanted to run VMs and other services (like Plex), I went with Scale - not only does it have a more advanced KVM-based virtual machine solution, but it also has a built-in Kubernetes cluster and charts for common software like Plex. I'd installed it on an old HP Microserver and played around with it in the week that it took the R730xd to get here, and I liked what I saw.

Some people install TrueNAS on a virtualisation platform like ESXi and forward the HBA card's PCI address through, but I didn't really see the need; I don't have any wild virtualisation needs, and TrueNAS still has the ability to do things like pass GPUs through to its VMs, so I don't need another layer just to run VMs on.

Once I had the NAS set up and the long SMART tests running on my new disks, I set about making a virtual machine to be my development VM. The plan - one virtual machine, located on a machine more powerful than any laptop or desktop I own, and on my house's gigabit fibre internet link.

I chose Debian - I have nearly always been a Debian/Ubuntu guy apart from my old dabblings with Gentoo, and I'm not a fan of where Ubuntu is right now with forcing snap, so back to the old reliable Debian it was. My dotfiles are already in a git repo, so syncing those down and getting my nice shell prompt and aliases was a breeze.

Among other things, I have aliased git pus to git push, because I got far too used to Mercurial's willingness to take unambiguous prefixes of command names, and I'm also too stubborn to change.

It's Like The Cloud, But At Home

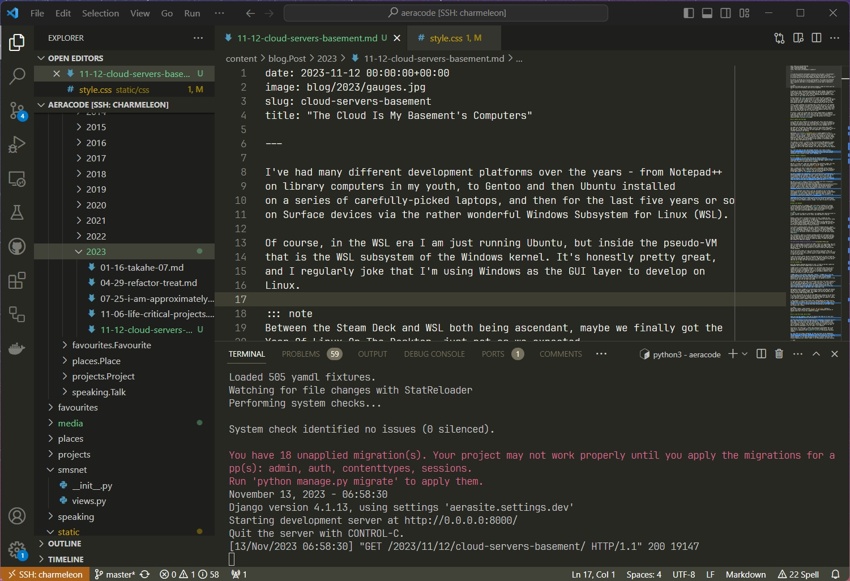

There are two key elements I have yet to mention that make all of this work - VS Code's SSH Remoting feature, and Tailscale.

You know that seamless VS Code integration with Codespaces I mentioned earlier? Well, VS Code offers pretty much the same experience - including automatic port forwarding - with any server that you can SSH into. The WSL extension works very similarly; VS Code is just very good at having its frontend in one place and its backend in another, and offers you a multitude of ways to do that. I have to give its developers a lot of credit - it's seamless.

Tailscale is the other part - rather than exposing my development VM to the wider world, I can instead have a Tailscale client running on it, and then connect to it seamlessly from anywhere in the world from another Tailscale computer.

So, with those two things configured, VS Code just boots up, immediately connects over SSH to my dev machine charmeleon (the NAS it runs on is, of course, called charizard), and opens the last project I was working on. If I want to switch projects, it's as easy and quick as doing it locally. The ports all forward themselves and I almost forget I'm not developing on my local machine.

Pokémon are a great naming choice for servers because they each have an associated Pokédex number you can use for the IP address. That's my excuse, anyway.
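For the curious, the naming scheme boils down to a tiny lookup. This Python sketch maps the two machines named above to their Pokédex numbers (Charmeleon is #5, Charizard is #6) and uses that number as the last octet of the address. The 192.168.1.0/24 subnet is a hypothetical placeholder, since the actual network range isn't mentioned.

```python
import ipaddress

# Pokédex numbers for the two machines mentioned in the post.
POKEDEX = {"charmeleon": 5, "charizard": 6}

# Hypothetical LAN range; not necessarily the subnet actually in use.
SUBNET = ipaddress.ip_network("192.168.1.0/24")


def address_for(hostname: str) -> ipaddress.IPv4Address:
    """Use the machine's Pokédex number as the host part of its address."""
    number = POKEDEX[hostname.lower()]
    # A /24 has 254 usable host addresses (.1 to .254).
    if not 1 <= number <= SUBNET.num_addresses - 2:
        raise ValueError(f"#{number} does not fit in {SUBNET}")
    return SUBNET.network_address + number


for name in POKEDEX:
    print(name, address_for(name))
# charmeleon 192.168.1.5
# charizard 192.168.1.6
```

A /24 leaves 254 usable host addresses, so all 151 original Kanto Pokémon fit with room to spare.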

Even better, it's one machine and one context - all three computers I mentioned before just need VS Code installed and I can develop on them and have the same context, database and incoming routing as anywhere else. I even have a Windows Terminal profile set up that just opens a direct SSH shell to the server, as if it was a local window. (Using the native Windows SSH client, which I am still sort of astonished is a thing!)

There's also a Samba share that exposes the dev machine's home directory as the H: drive, so if I want to go and edit some images, I can just pop over and open them in a normal image editor. And, let me tell you, I am very glad that I am running Samba over Tailscale rather than the open Internet.

In Conclusion

I've been working this way for a week now, and it's already been a huge quality of life improvement in how I can work on projects. Having a single place where my Takahē development copy can live has been especially helpful.

Are there downsides? Yes - the obvious one is that you need an internet connection to work on code, though with the amount that I search for things that you'd think I'd know by this point, that was basically a requirement anyway. Plus the Surface Pro 9 has a built-in 5G modem that I have a Google Fi SIM Card in, so it gets internet basically anywhere on Earth (as well as at low altitudes above it).

I'm sure that the latency from, say, Australia will not be great, but editing in VS Code is far less latency-sensitive than using something like Vim over plain SSH - all the text editing still happens locally, and only file saving, formatting, and terminal interaction are forwarded to the remote server.

There are also the running costs - based on my power metering, the R730xd is costing me about an additional $0.02 per hour to run compared to the old NAS. That's around $14 a month, which in my opinion is pretty great for a rack-mount server, is cheaper than colocating it in a proper datacentre, and is a lot less than I'd spend on Codespaces for the amount of RAM and CPU I'd need each month.
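The monthly figure is just the hourly one multiplied out. Here is a quick Python check, assuming an average month of 24 × 365 / 12 = 730 hours. The hourly_cost helper is an extra, hypothetical convenience for turning a wattage and an electricity price into a per-hour cost; no tariff is given here, so any price you feed it is your own assumption.

```python
# Average hours in a month: 8,760 hours per year divided by 12.
HOURS_PER_MONTH = 24 * 365 / 12  # 730.0


def hourly_cost(watts: float, price_per_kwh: float) -> float:
    """Cost of running a constant load for one hour.

    price_per_kwh is a hypothetical input; plug in your own tariff.
    """
    return watts / 1000 * price_per_kwh


def monthly_cost(cost_per_hour: float) -> float:
    """Scale an hourly running cost up to an average month."""
    return cost_per_hour * HOURS_PER_MONTH


# About $0.02/hour extra compared to the old NAS.
print(f"${monthly_cost(0.02):.2f} per month")  # prints "$14.60 per month"
```

That comes to roughly $14.60, in the same ballpark as the monthly estimate above.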

Another great thing about commercial servers? Built-in power metering. I can log in and see that it's idling right now at 140W.

This blog post was written on three machines - I started it at my workshop, did most of the writing in my office, and then copy-edited it from my sofa while watching some TV. It's been great.

Talking about this on Mastodon, I'm obviously not the only person doing something like this - but I am here to spread the good word and mention how specifically good VS Code is at doing this. Is this just the thin client fad come around again? Oh, of course it is, but in a world where internet connectivity is increasingly ubiquitous and reliable, the time seems right for it.

And the R730xd? It's a fantastic server and such a massive speed upgrade over my old NAS. Plex doesn't even sweat transcoding on the fly now, I still have about 50GB of free RAM even with the ZFS cache taking up a big chunk of it, there are still seven spare drive bays, and TrueNAS continues to have a great power-user UI.

About the only thing left to do is to go get an older Nvidia GPU accelerator card to stick in it for things that want CUDA; we now live in the world of image diffusion and large language models, after all, and given it's now winter here in Colorado, my basement could use the extra heat.

Andrew can be reached on Mastodon or email at andrew@aeracode.org